Are we who the algorithm thinks we are?

Identity construction in an algorithmic culture

To what extent does the algorithm’s conception of our identity influence our actual persona, public or private? Similarly, does our conception of the algorithm, and the identity we project onto it, indirectly influence how we see ourselves?

The first question may be a little easier to contemplate. If an algorithm believes we’re interested in rural survivalist content mixed in with some urban comedy, then that is what it will serve us. Insofar as we consume it, would we not start to emulate and gravitate towards that kind of identity?

There’s certainly agency in such a process of identity construction, but the algorithm also clearly exerts influence in deciding which content to serve us. Its read on our interests doesn’t have to be exact: as long as we don’t close the app or leave the platform, the algorithm has served its masters, and we in turn serve the algorithm.

Yet the algorithm’s conception of who we are is not static; it responds to how we respond to the content on offer. That’s part of where the agency comes from, but it also reflects how the algorithm’s construction of our identity tends to lead our own construction of identity, at least for many people.

Perhaps one way of expanding the agency involved is, as we noted in Monday’s issue, to personify the algorithm and draw out its role and biases by giving the algorithm itself a sort of personality or identity.

With that in mind, let’s check out some interesting research via our friends at the Data & Society institute.

New on Points: @sophiehbishop & @TanyaKant1 provide an overview of their workshop on "algorithmic identities," which helps participant understand their personal relationship to data https://t.co/bs1GpLSi2a

— Data & Society (@datasociety) December 9, 2020

Our research workshop, “Algorithmic Autobiographies and Fictions,” is animated by the point that “everyday folks” are just as interested in how algorithms shape our world as those who study them academically and professionally. In this blog post, we provide an outline for researchers who want to run our practical workshops to understand how audiences conceive of their personal relationships with their data. The design of our workshop is informed by both of our research, which acknowledges that individuals develop theories and strategies to help them navigate these technologies, even when they are ostensibly “black boxed.” Sophie’s work looks at how influencers’ community conversation and gossip about platforms shapes the content that they produce and distribute. Tanya’s examines how the anticipation and perception of advertising personalization shapes users’ sense of selves. We want to understand how users think these processes draw from their identities, and in turn, shape them.

The work around gossip is particularly relevant, as it reflects how knowledge abhors a vacuum. Just because the digital platforms choose not to disclose how their algorithms work doesn’t stop people from guessing or gossiping about what is really going on, whether through anecdotes shared from experience or urban legends that claim to originate with some engineer or developer.

While these are the makings of myth, they also reflect an overwhelming desire to understand how these systems work.

Which is what makes this workshop framework so potentially empowering. It provides people with the permission and process by which they can deduce and dissect their algorithmic world.

Titled “Algorithmic biographies and fictions,” these relaxed and generative workshops prioritize creative engagement with data. As we tell our participants, this is a “no tech workshop”—no experience with data is required. Instead, we use creative writing and drawing techniques to allow social media users to “meet and greet” their “algorithmic selves” through the ad preference profiles that social media platforms create about their users. The workshops have a pedagogical function: we use them as an opportunity to increase data and algorithmic literacies, forms of digital literacy that Henderson et al. note is currently in need of promotion with the wider public. Mostly though, we steer participants away from doom-laden prophesies of social breakdown and civil war. We hope instead that the workshop allows for dynamic engagement with social media data through creative writing and drawing in ways that increase user understanding and agency.

The workshop itself might be a worthwhile salon for us to run, with each of us going through the recommended exercise. Here’s the substance of it:

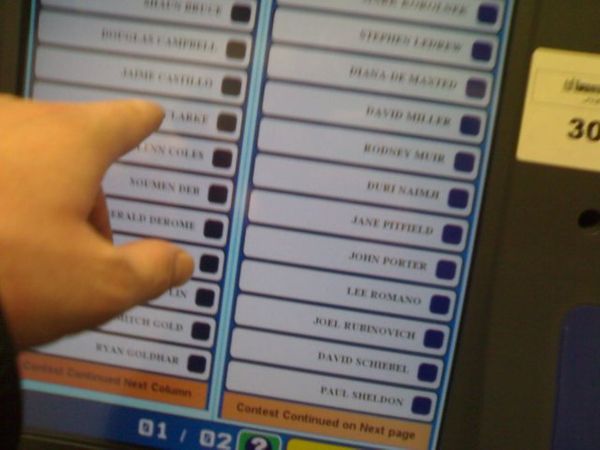

Next up, we give step-by-step instructions on how to access available ad preferences on various social media platforms. Using handouts, we usually show participants how to access their ad preferences on Google, Facebook, or Instagram. The pathways for accessing this information are adjusted regularly by platforms, so it’s important to revisit the instructions we give out prior to each workshop. If time allows, we invite participants to access their data for each social media and cross-check their results.

After asking participants to access their ad preference profiles on the platform of their choice, we ask them to jot down some notes about what “interest categories” stand out for them: if they have lots or little listed interests, how they feel about what has been inferred about them, and how this will affect what they draw/write. We then move on to the first exercise: drawing your “algorithmic self.” Prompted by questions such as “What do they look like? What is their age and gender, if any?”, we ask participants to draw a picture. We stress that no artistic skill is required and that their “algorithmic selves” will most likely reflect only a fraction of their listed interests: there are usually too many to encompass them all in one picture (an important pedagogical point on data tracking in and of itself).

We then move on to the creative writing exercise: Sophie leads with a list of her ad preferences from Instagram, writing a short story that she reads as an example. We then ask participants to write a short story, poem, or play (or any format of writing they like) describing a “day out” with their digital self. Prompted by questions such as “What did you ask your algorithmic self? What did they say to you? What did they want to do?”, we ask participants to write for around 15 minutes.

If this sort of workshop would be of interest to you, then please post a comment on this post. ;)

Overall, it is a smart means of externalizing both the algorithmic profile and the process that goes into constructing it. The result is not just heightened literacy but also a greater desire for accountability or responsibility. While this helps us mock our algorithmic self, it should also foster the desire to modify, if not claim ownership of, our algorithmic self.

Do we own and control our digital shadow? Presently, no. Yet perhaps we should?

Framed as our shadow, the idea of ownership, while abstract, still seems reasonable: a shadow is external to us, yet created by us, or by our actions.

Yet things really get surreal when we ponder the existence of the self itself. Who are we and how did we come to be? Biologically we may believe we have the answer, but culturally that is increasingly difficult to comprehend.

GhostWriter a new literary genre:the #algorithmic #autobiography : who is writing your autobiography? #streamingegos pic.twitter.com/O2uucgkVMb

— Salvatore Iaconesi (@xdxd_vs_xdxd) January 15, 2016

It’s a fair question to ask yourself: who is writing your autobiography? It would be a mistake to believe that it is not being written just because you are not (aware of your role in) writing it. In a surveillance society your autobiography is written automatically.

Algorithmic Autobiography: a fundamental article on Art is Open Source https://t.co/rqQOzOE0P8 #self #identity #data pic.twitter.com/NZoe0t7twY

— Salvatore Iaconesi (@xdxd_vs_xdxd) February 16, 2016

What is an autobiography?

Are we writing an autobiography by leaving our traces on social networks, every day? What about the large quantities of other digital traces we leave behind in our lives?

What happens when non-human and algorithmic subjects/entities come into play, increasing the complexity of our interactions and influencing the process of construction and perception of the self?

We progressively expose ourselves more, every day, consciously and unconsciously, with consequences that are difficult to grasp: about time, identity, memory, rights.

For the Streaming Egos project we have decided tackle this topic and analyse the uncertain territories/boundaries of the “I” and “self” asking ourselves who (and/or what) is the author of our autobiographies in the hyper-connected and syncretic world we live in.

This article is an attempt to summarize and put together the elements emerged from the process.

Rather than conclusive answers, we found a series open questions, leading us to establish a new research field in which we will progressively try to explore the mutation of self narratives and, more precisely, the meanings and possible consequences of a contemporary, technologically mediated autobiography.

The article above is worth reading if you really want to meditate on the existential angst of our algorithmic age, especially when it comes to the construction of identity.

What interests me is the larger issue of agency, and how our interplay or relationship with algorithmic systems can be shifted in favour of the user rather than the platform.

By default, my response tends to be transparency. However, the exercise discussed in today’s issue pushes my thinking in this regard. Yes, transparency is important, but it’s also not enough. Transparency without agency can often just be torture.

Instead, transparency is a gateway to wanting greater agency and interaction, and to wanting greater authorship and flexibility when it comes to our identity.

That raises another paradox of algorithmic identity. On the one hand, it appears to be facilitating even greater (social) diversity.

On the other hand, it is also (subtly) imposing greater rigidity of identity, making it difficult for people to change or adopt a different (collective) identity.

This rigidity is a consequence of our autobiography being automatically recorded, with our past following us as a permanent record wherever we go.

To what extent must we also foster forgiveness and acceptance of growth and change?